Jim's AI Talk to AASHTO Committee on Traffic Engineering 2025

From Palm Pilots to AI hallucinations, why DOTs face more words than numbers—and why solving the digital filing cabinet problem matters most.

Transcript (edited by A.I.)

Last year in Portland, we talked about a lot: my hypothesis that AI is bad, AI is good, AI is misunderstood. I still believe that. I used the analogy: today’s AI is like the Internet in 1998. We went from dial-up to broadband, video, mobile, social, and cloud. It’s easy to look back now, but remember how chaotic it felt during the “gurgling modem” days?

In 2000, Palm, maker of the Palm Pilot, was worth more than Apple, Nvidia, and Amazon combined. We’ve come a long way.

Glenn brought up copyright. Last year I talked about AASHTO’s need to generate revenue from technical publications. Naturally, they’re cautious about plugging proprietary content into LLMs. A lot of litigation is underway, and I suspect multiple Supreme Court cases will try to define what copyright means in the age of AI. AASHTO isn’t alone in this.

We also looked at the absurd: people asking AI how many rocks to eat, or complaining that their cheese slides off their pizza and being told to add glue to the sauce. Autonomous vehicles misclassifying things. Vision algorithms mistaking signs, trucks, and people.

We talked about algorithmic feeds—Facebook, YouTube, TikTok—and how if they controlled your diet, you’d get chocolate cake for breakfast, lunch, dinner, and snacks. Because you like cake. What could possibly go wrong?

We reviewed two AI use cases: 1) distilling meeting transcripts—something we now do often, with about 550 transcripts recorded so far, despite legal questions—and 2) summarizing vendor proposals. That second one hasn’t evolved much yet. Jury’s still out.

That was last year. Today, in Des Moines, we’re covering: the time when Elvis met Napoleon, an owl, some broccoli, a filing cabinet, a glass of wine in front of a clock, the world’s longest cow, a DMS created by AI, and a heat map. Yes, AI is ridiculous. But it’s also solving real problems. I’ll show both.

You’ll hear people say, “It hallucinates, you can’t trust it.” The same was said about Google in the 1990s. Skepticism is healthy. But that didn’t stop Google from becoming a $3 trillion company.

The real problems I hear about from DOTs aren’t just connected vehicles and futuristic systems. They’re paperwork: digital filing cabinets full of PDFs, where “Control-F” is still the best way to search. That’s wild in 2025.

We were promised flying cars. We got “Control-F.”

Here’s my controversial hypothesis: in many DOT contexts, the words might matter more than the numbers. That’s the real problem. There are too many words. No one can read them all. No time, no capacity, and frankly, no will.

We think the digital filing cabinet problem is worth solving. AI is still bad, good, and misunderstood—only now, even more so. The highs are higher. The lows are lower. And it all moves faster.

AI costs are dropping. Models have gone from $100 per million tokens to 10 cents per million. That’s like a $75,000 luxury car now costing $75. At the same time, performance is improving.
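As a back-of-the-envelope check, the two prices quoted above imply a thousandfold drop, which is the same ratio as the car analogy. A minimal sketch using only those figures (`reduction_factor` is just an illustrative helper name):

```python
def reduction_factor(old_price: float, new_price: float) -> float:
    """How many times cheaper the new price is than the old one."""
    return old_price / new_price

# Prices per million tokens, as quoted in the talk.
OLD_PER_MILLION = 100.00
NEW_PER_MILLION = 0.10

factor = reduction_factor(OLD_PER_MILLION, NEW_PER_MILLION)
car_today = 75_000 / factor  # apply the same drop to a $75,000 car

print(f"{factor:,.0f}x cheaper")  # 1,000x cheaper
print(f"${car_today:,.2f} car")   # $75.00 car
```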

Some problems, though, persist. One survey asked AI experts if AI could cause a human catastrophe. One-third said yes. That’s sobering.

Just last week on CNN, the CEO of Anthropic said AI could eliminate half of all entry-level white-collar jobs and push unemployment to 10–20%. That’s… not great. I’ve got college-aged kids. That worries me.

But let’s not panic. Missy Cummings, robotics professor and former fighter pilot, says generative AI “knows nothing, cannot reason, does not have intent.” Some of the alarm is just marketing. If you’re selling arms, you want people to feel afraid.

So, be skeptical, stay grounded.

AI still can’t draw people on a beach correctly. It doesn’t even know what a single foot looks like.

Here’s a DMS image generated by AI: the signpost is on the wrong side of the guardrail. My daughter pointed out the shadows are off, it’s low contrast, and the background vehicles are nonsense.

I asked ChatGPT for a fictional story of Napoleon meeting Elvis. It gave me one. The image looks plausible at first glance. The whole backstory sounds legit—until you remember it’s completely made up. Some people won’t notice.

Google Pixel can now generate fake potholes. Take a photo, click “add road damage,” and it’s done. Now it feels like the DOT’s fault, right?

There’s also “vegetative electron microscopy,” a term that doesn’t exist. It traces back to a bad scan of a 1959 article that merged words from two columns of text; AI models picked it up, and it now appears in real published papers.

AI still can’t tell time on analog clocks. A request for “a full glass of wine and a clock showing 6:30” returns wine not filled to the brim and a clock showing… who knows what. Could be 10:08, could be 1:50.

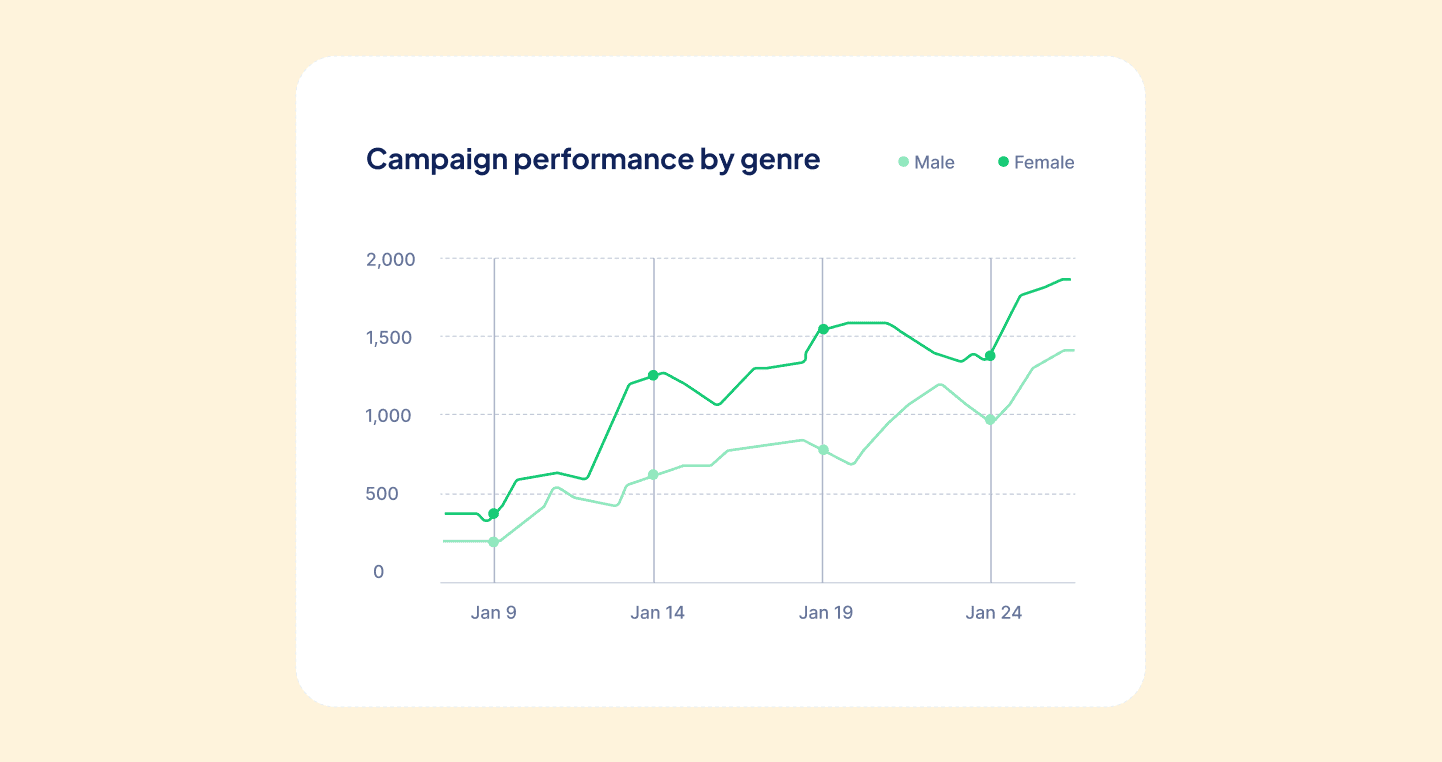

Math? Not great either. A heatmap showed LLMs doing fine on small multiplications, but failing on big ones. I checked in Excel—it got 100%. Of course. Because math.
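A heatmap like that is essentially a grid of accuracy rates, one cell per pair of operand sizes. The sketch below assumes the model can be wrapped as a function that takes two integers and returns its answer; `accuracy_grid`, `exact`, and `memorized_only` are hypothetical names, and `memorized_only` is a toy stand-in, not a real LLM. Exact arithmetic, like the Excel check, scores 1.0 in every cell.

```python
import random
from typing import Callable

def accuracy_grid(
    answer_fn: Callable[[int, int], int],
    max_digits: int = 5,
    trials: int = 50,
    seed: int = 0,
) -> list[list[float]]:
    """grid[i][j] = share of correct products for (i+1)-digit x (j+1)-digit operands."""
    rng = random.Random(seed)
    grid = []
    for da in range(1, max_digits + 1):
        row = []
        for db in range(1, max_digits + 1):
            correct = 0
            for _ in range(trials):
                a = rng.randint(10 ** (da - 1), 10 ** da - 1)
                b = rng.randint(10 ** (db - 1), 10 ** db - 1)
                if answer_fn(a, b) == a * b:
                    correct += 1
            row.append(correct / trials)
        grid.append(row)
    return grid

def exact(a: int, b: int) -> int:
    # Deterministic arithmetic, like a spreadsheet formula.
    return a * b

def memorized_only(a: int, b: int) -> int:
    # Toy "model": right on small, familiar products; on bigger ones it
    # returns a plausible-looking answer rounded to the nearest thousand.
    product = a * b
    return product if product < 10_000 else round(product, -3)

for row in accuracy_grid(memorized_only, max_digits=4):
    print(" ".join(f"{cell:.2f}" for cell in row))
```

Plugging in a real model would also require parsing its text reply into an integer, which is left out here.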

LLMs aren’t doing math. They’re regurgitating what others said math is. That’s fine for common problems; not so great for anything unusual.

Another case: Google Gemini gave the wrong answer for πr². Missed the area of a circle by thousands of square inches. You’d never tolerate that from an EIT.
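For reference, the formula in question is A = πr². The sketch below checks a claimed area against it. The 60-inch radius and the diameter-for-radius slip are hypothetical, chosen only to show how easily an error reaches thousands of square inches; they are not the answer Gemini actually gave.

```python
import math

def circle_area(radius: float) -> float:
    """Area of a circle: A = pi * r^2."""
    return math.pi * radius ** 2

def area_error(radius: float, claimed_area: float) -> float:
    """Absolute gap between a claimed area and the correct one."""
    return abs(claimed_area - circle_area(radius))

# Hypothetical slip: using the diameter (120 in) where the radius (60 in) belongs.
radius_in = 60.0
wrong_answer = circle_area(2 * radius_in)

print(f"correct: {circle_area(radius_in):,.1f} sq in")              # correct: 11,309.7 sq in
print(f"off by:  {area_error(radius_in, wrong_answer):,.1f} sq in")  # off by:  33,929.2 sq in
```

Doubling the radius quadruples the area, so this one slip overstates the answer by three times the correct value.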

This is the world’s longest cow: 5.4 meters. Except it’s not. But the same logic led a Cruise vehicle to rear-end an articulated bus. The system didn’t expect a bus to be that long. It was confused.

I don’t focus much on autonomous vehicles, but I can’t avoid them. They’re critical to conversations on AI and safety. Waymo’s going slowly and responsibly. GM’s Cruise, meanwhile, pulled back after incidents. It’s not a game for underfunded players.

In China, Xiaomi (pronounced roughly “SHAO-mee”) is moving fast, with some tragic results. Fatal crashes suggest they’re pushing too far, too fast.

Aurora in Texas rolled out driverless trucks, then two weeks later put a person back in the driver’s seat. It’s a whiplash cycle.

Tesla’s supposed to launch its Robotaxi soon: no lidar, just cameras. Tremendous safety implications. Nobody knows what to expect.

Philip Koopman, Carnegie Mellon prof, says we’re beta testing cars on public roads. We didn’t sign up for that. That’s a real concern.

Let’s talk Venn diagrams: roads, vehicles, people, policies. These don’t overlap neatly. People matter, even when they’re not in vehicles. But AVs don’t have a human driver. So where do they fit?

The private sector can’t solve this alone. It’s profit-motivated, and I am too. But we need balance. DOTs have a critical role.

What can you actually do? Here’s where I stop drawing owls and give three actionable tips:

1. Change your default desktop search engine to ChatGPT (or any LLM).

Not just play with it—make it your default. That forces behavior change. I tried it for a week. Never went back. Like going from AltaVista to Google in 1999. Do it for a week. It’s different. It’s better.

2. Use advanced AI agents to ask complex questions.

Don’t just ask for facts—task the model with a project. I asked one to help build a new engineering firm. It gave me logos, a website, a business model, and a plan.

I then tried something more relevant: write a Strategic Highway Safety Plan. I’m not a traffic engineer, so I asked ChatGPT to help frame the prompt. It did. Then it generated:

- Crash trend snapshots

- State-specific analysis

- Emphasis areas and strategies

- Sample language

- Next steps

All in 1:28. Impressive. Quality? Variable. Quantity? Astounding. Speed? Undeniable.
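The “ask it to help frame the prompt first” step generalizes into a simple two-call pattern. A minimal sketch, assuming `complete` is any function that sends text to an approved chat model and returns its reply; `framed_request`, `Complete`, and `recording_model` are hypothetical names, and the stub model only records what it receives so the plumbing can be tested offline.

```python
from typing import Callable

# Any function that sends a prompt to a chat model and returns the reply text.
# Hypothetical: connect it to whichever LLM service your agency has approved.
Complete = Callable[[str], str]

def framed_request(task: str, complete: Complete) -> str:
    """Two calls: the model first writes a better prompt, then answers it."""
    meta_prompt = (
        "I am not a subject-matter expert. Write a detailed prompt I could give "
        "an AI assistant to accomplish the task below. Spell out the sections, "
        "data sources, and assumptions the answer should cover.\n\n"
        f"Task: {task}"
    )
    better_prompt = complete(meta_prompt)  # step 1: model frames the request
    return complete(better_prompt)         # step 2: model answers its own framing

# Offline stub for testing the plumbing; it records prompts instead of calling a model.
calls: list[str] = []

def recording_model(prompt: str) -> str:
    calls.append(prompt)
    return f"draft {len(calls)}"

result = framed_request("Draft a Strategic Highway Safety Plan for my state", recording_model)
print(result)
```

The design choice is simply that the second call receives the model's own framing rather than the user's first attempt, which is what helps when the person asking isn't a domain expert. The output still needs expert review.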

3. Have interns use AI agents to tackle real business problems.

Florida did this. Interns, with a little IT support, explored what could be done. No need to build a whole software stack—just see what’s possible. Then, share your findings. Build the case. Overcome procurement, legal, and security hurdles. Show value.

Use cases we’re working on:

- TMC SOPs (633 pages) + incident inputs: “What do I do with a tractor trailer overturned here?”

- Engineering cost estimation: Too many Excel sheets, no synthesis.

- Traffic policy discovery (TEPAL): 2000 PDFs, 95 documents on school zones. You need analysis, not Ctrl-F.
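Part of what “analysis, not Ctrl-F” means is ranking passages by relevance instead of demanding an exact phrase match. The sketch below swaps in classic TF-IDF keyword ranking, which is far simpler than the LLM tools described here, and the SOP snippets are invented for illustration. The query “tractor trailer overturned” would fail as a Ctrl-F phrase search against the first snippet, yet that snippet ranks first.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    """Lowercase words and numbers only."""
    return re.findall(r"[a-z0-9]+", text.lower())

def rank_passages(query: str, passages: list[str], top_k: int = 3) -> list[tuple[float, str]]:
    """Rank passages by TF-IDF overlap with the query, highest score first."""
    docs = [Counter(tokenize(p)) for p in passages]
    n = len(docs)
    # Document frequency: how many passages contain each term.
    df = Counter(term for d in docs for term in d)
    scored = []
    for text, counts in zip(passages, docs):
        score = 0.0
        for term in set(tokenize(query)):
            if term in counts:
                idf = math.log((n + 1) / (df[term] + 0.5))  # rarer terms weigh more
                score += (1 + math.log(counts[term])) * idf  # dampened term frequency
        scored.append((score, text))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]

# Invented SOP snippets, for illustration only.
sop = [
    "Overturned tractor trailer: notify state patrol, request heavy wrecker, set DMS advisory.",
    "School zone flashers: verify schedule with district before each semester.",
    "Minor fender bender on shoulder: monitor CCTV, no lane closure needed.",
]

for score, text in rank_passages("tractor trailer overturned on I-80", sop, top_k=1):
    print(f"{score:.2f}  {text}")
```

One plausible next step would be handing the top-ranked passages to an LLM along with the incident details, with a person reviewing the result.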

AI can help—but only with supervision. It’s not plug-and-play. But 50–70% useful output in seconds is a good start.

This is all still in pilot stage. We’re just getting into the starting blocks. Can’t finish the race until you start.

Final thought: consulting + software = opportunity.

Consultants know the domain. We know how to build scalable tools. Together, we can solve real problems—faster, better.

To close:

- Costs are dropping exponentially

- You only see 10% of what’s going on in AI—the rest is underwater

- Regulation is needed

- Successes and failures will happen

- The trend? That’s up to us

Reframed conclusion:

Change comes fast.

Answers come slow.

We still have a long way to go.

Thanks.
