Built for Evolving DOT Needs

AI That Keeps People Moving

The next generation of transportation has arrived.

People have places to be.

So do the people getting them there.

Beacon's AI-powered solutions help transportation pros and agencies make faster, safer decisions, where and when they matter most.

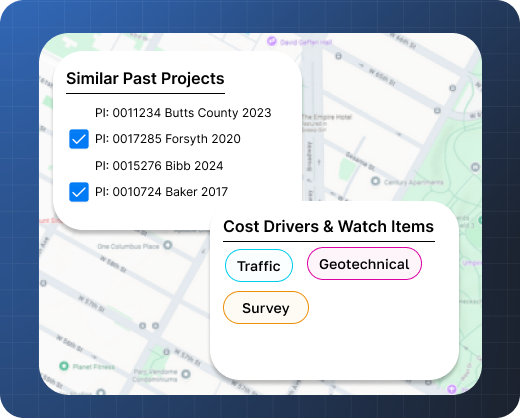

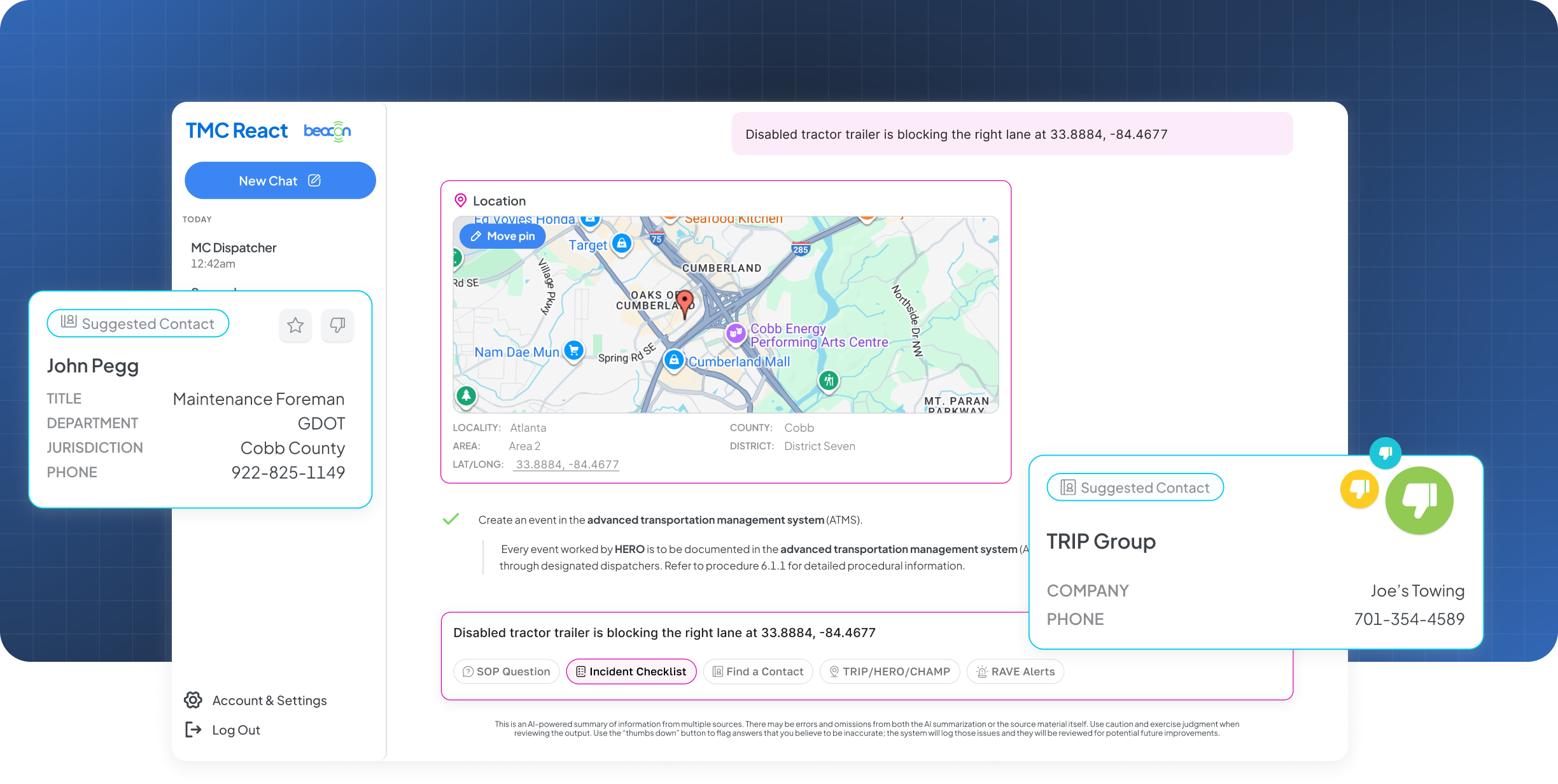

All Signal, No Noise

No more endless lists of search results. Beacon synthesizes data, documents, and transcripts into the answer you need to make the call.

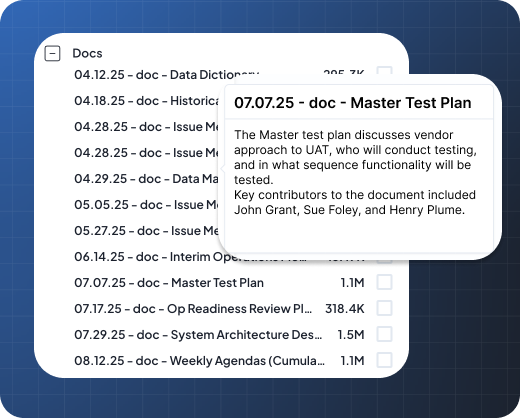

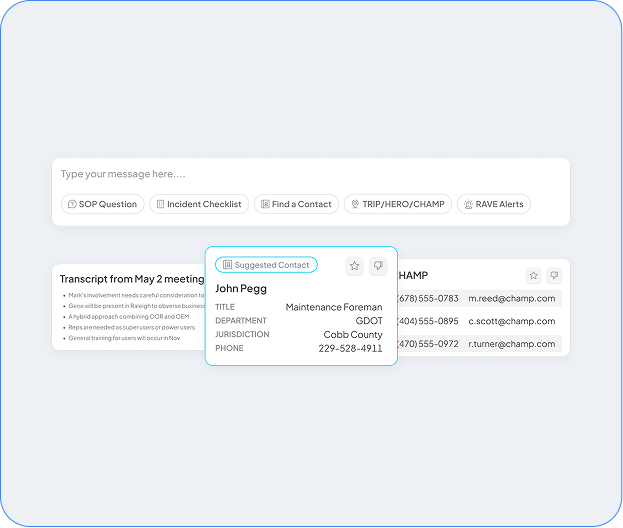

Every Answer, Surfaced Instantly

Contacts, meeting transcripts, and 600-page SOPs, all stored in one searchable repository.

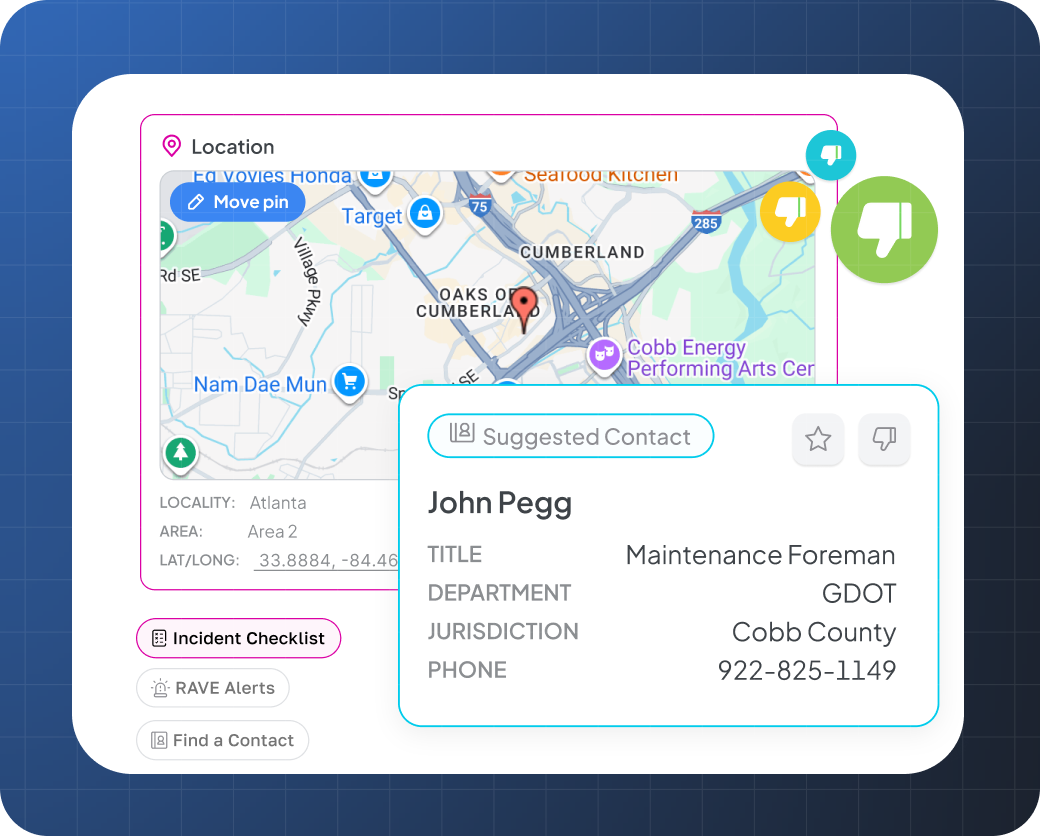

Location Context, Built In

From engineering estimates to emergency response, Beacon maps people, projects, and context—giving you location clarity when it matters most.

Audit-Ready Traceability

Every keystroke is logged, giving managers an instant record for review.